Question:medium

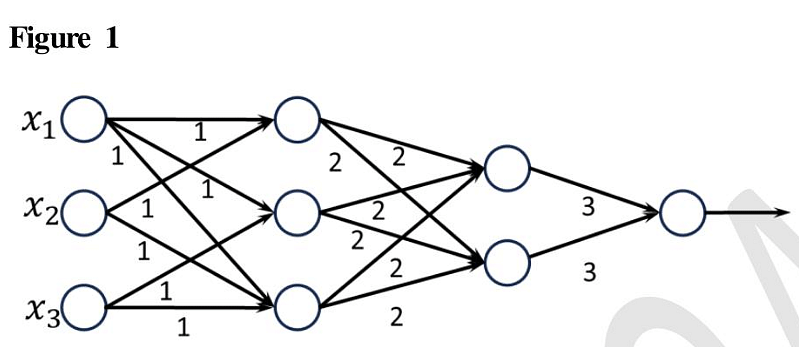

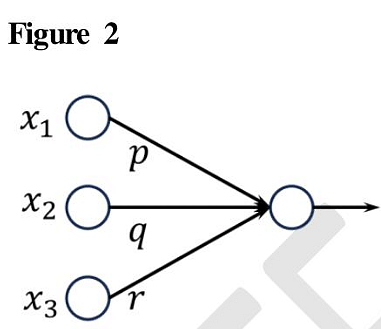

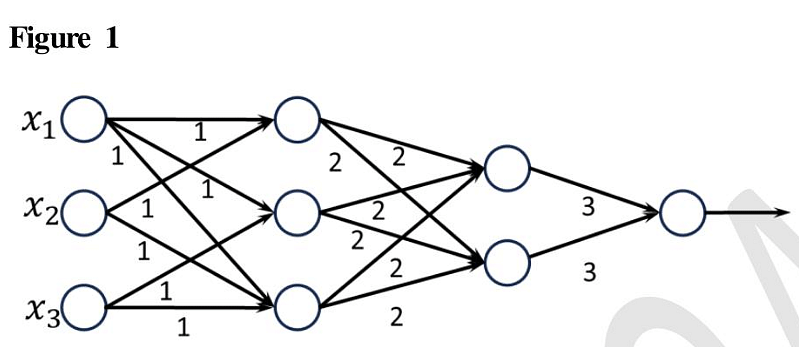

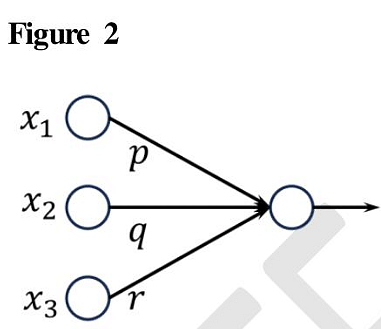

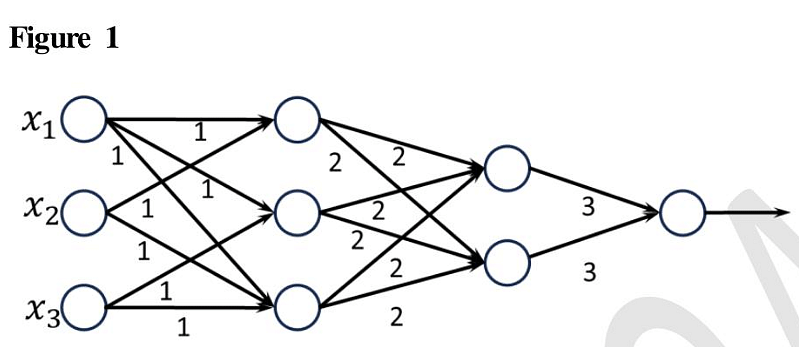

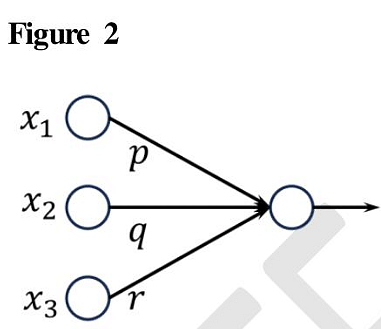

Consider the two neural networks (NNs) shown in Figures 1 and 2, each using the ReLU activation, ReLU(z) = max{0, z} for all z ∈ R, where R denotes the set of real numbers. The connections and their corresponding weights are shown in the figures, and the bias at every neuron is set to 0. For what values of p, q, r in Figure 2 are the two NNs equivalent when x1, x2, x3 are positive?

Updated On: Nov 25, 2025

- (A) p = 36, q = 24, r = 24

- (B) p = 24, q = 24, r = 36

- (C) p = 18, q = 36, r = 24

- (D) p = 36, q = 36, r = 36

The Correct Option is A

Solution and Explanation

The correct choice is (A): p = 36, q = 24, and r = 24.
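The general idea behind this kind of question can be sketched in code. When every input is positive and every weight is nonnegative (an assumption here, since the figures are not reproduced in the text), every pre-activation is nonnegative, so ReLU acts as the identity and a chain of layers collapses into a single weighted sum whose weights are products along each path. The architecture and the weights `A`, `B`, `C` below are hypothetical and are not taken from Figures 1 and 2; they only illustrate the check.

```python
import math
import random


def relu(z):
    """ReLU(z) = max{0, z}."""
    return max(0.0, z)


def layered_net(x, a, b, c):
    """Hypothetical three-stage network with zero biases:
    inputs -> one ReLU neuron (weights a) -> one ReLU neuron (weight b)
    -> ReLU output (weight c)."""
    h1 = relu(sum(ai * xi for ai, xi in zip(a, x)))
    h2 = relu(b * h1)
    return relu(c * h2)


def single_layer_net(x, w):
    """Hypothetical single-neuron network: ReLU of one weighted sum."""
    return relu(sum(wi * xi for wi, xi in zip(w, x)))


def collapse(a, b, c):
    """With positive inputs and nonnegative weights, ReLU is the identity,
    so the equivalent single-layer weights are products along each path."""
    return tuple(c * b * ai for ai in a)


# Hypothetical weights, NOT read from the figures.
A, B, C = (1.0, 2.0, 3.0), 2.0, 5.0
W = collapse(A, B, C)


def agree_on_random_inputs(trials=1000, seed=0):
    """Compare both networks on random strictly positive inputs."""
    rng = random.Random(seed)
    for _ in range(trials):
        x = [rng.uniform(0.1, 10.0) for _ in range(3)]
        if not math.isclose(layered_net(x, A, B, C),
                            single_layer_net(x, W), rel_tol=1e-9):
            return False
    return True
```

Applying the same path-product reasoning to the weights actually shown in Figure 1 is what yields the values of p, q, r; the sketch does not depend on which option is correct.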
